In the previous post, we conducted a basic WaterPaths tutorial in which we ran a simulation-optimization of the North Carolina Research Triangle test case (Trindade et al, 2019) across 1000 possible futures, or states of the world (SOWs). To briefly recap, the Research Triangle test case consists of three water utilities in Cary (C), Durham (D) and Raleigh (R). Cary is the main supplier, having a water treatment plant of its own. Durham and Raleigh purchase water from Cary via treated transfers.

Having obtained the .set file containing the Pareto-optimal decision variables and their respective performance objective values, we will now walk through the .set file processing and visualize the decision variables and performance objective space.

Understanding the .set file

First, it is important that the .set file makes sense. The NC_output_MS_S3_N1000.set file should have between 30 and 80 rows and a total of 35 columns. The first 20 columns contain the values of the decision variables listed below. Note that only Durham and Raleigh have water transfer triggers, as they purchase treated water from Cary.

- Restriction trigger, RT (C, D, R)

- Transfer trigger, TT (D, R)

- Jordan Lake allocation, JLA (C, D, R)

- Reserve fund contribution as a percentage of total annual revenue, RC (C, D, R)

- Insurance trigger, IT (C, D, R)

- Insurance payments as a percentage of total annual revenue, IP (C, D, R)

- Infrastructure trigger, INF (C, D, R)

The last 15 columns contain the objective values for the following performance objectives of all three utilities:

- Reliability (REL) to be maximized

- Restriction frequency (RF) to be minimized

- Infrastructure net present cost (INF_NPC) to be minimized

- Peak financial cost of drought mitigation actions (PFC) to be minimized

- Worst-case financial cost of drought mitigation actions (WCC) to be minimized
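As a quick sanity check on the layout described above, the column labels can be generated and counted before any processing. The sketch below is illustrative only: the label names (such as `RT_C`), the ordering of objectives by utility, and the assumption that the .set file is whitespace-delimited with `#` comment lines are all hypothetical and should be checked against the actual file and against post_processing.py.

```python
import io

UTILITIES = ["C", "D", "R"]

# Decision-variable groups as listed above; TT applies only to Durham and Raleigh.
DV_GROUPS = {
    "RT": UTILITIES, "TT": ["D", "R"], "JLA": UTILITIES, "RC": UTILITIES,
    "IT": UTILITIES, "IP": UTILITIES, "INF": UTILITIES,
}
OBJECTIVES = ["REL", "RF", "INF_NPC", "PFC", "WCC"]


def build_labels():
    """Return hypothetical labels: 20 decision variables, then 15 objectives."""
    dv_labels = [f"{dv}_{u}" for dv, users in DV_GROUPS.items() for u in users]
    # Assumption: objectives are grouped by utility (five for C, then D, then R).
    obj_labels = [f"{obj}_{u}" for u in UTILITIES for obj in OBJECTIVES]
    return dv_labels, obj_labels


def read_set(handle, n_columns=35):
    """Parse whitespace-delimited rows, skipping blank and '#' comment lines."""
    rows = []
    for line in handle:
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        values = [float(v) for v in line.split()]
        if len(values) != n_columns:
            raise ValueError(f"expected {n_columns} columns, found {len(values)}")
        rows.append(values)
    return rows


# Synthetic one-row stand-in for the real file, used only to exercise the parser.
demo = io.StringIO("# comment line\n" + " ".join(["0.5"] * 35) + "\n")
rows = read_set(demo)
dv_labels, obj_labels = build_labels()
print(len(dv_labels), len(obj_labels), len(rows), len(rows[0]))  # 20 15 1 35
```

To check the real output, the same function could be called on an opened NC_output_MS_S3_N1000.set file, followed by a check that the number of rows falls in the expected 30 to 80 range.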

This reference set needs to be processed into a .csv file to enable re-evaluation for robustness analysis. To do so, run the post_processing.py file found in this GitHub repository from the command line:

python post_processing.py

In addition to post-processing the optimization output files, this script also performs the regional minimax operation, in which each regional performance objective is taken to be the objective value of the worst-performing utility (Gold et al, 2019).
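The minimax operation can be illustrated with a minimal sketch. This is not the code in post_processing.py; the function name, the data layout, and the example values are made up for illustration. It also assumes that reliability is stored as a positive fraction (some optimizers store maximized objectives with a flipped sign, in which case the logic would need adjusting).

```python
# Objectives to be maximized; every other objective is minimized.
MAXIMIZED = {"REL"}


def regional_objectives(per_utility):
    """Collapse per-utility objective values into regional (worst-case) values.

    per_utility maps an objective name to a {utility: value} dictionary.
    """
    regional = {}
    for objective, values in per_utility.items():
        # Worst performer: lowest value if maximized, highest if minimized.
        pick = min if objective in MAXIMIZED else max
        regional[objective] = pick(values.values())
    return regional


# Illustrative values only, not taken from the reference set.
example = {
    "REL": {"C": 0.995, "D": 0.981, "R": 0.990},
    "RF": {"C": 0.05, "D": 0.12, "R": 0.20},
}
print(regional_objectives(example))  # {'REL': 0.981, 'RF': 0.2}
```

Here Durham sets the regional reliability and Raleigh sets the regional restriction frequency, since each is the worst performer for that objective.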

This should output two files:

- NC_refset.csv: No header row. This is the file that will be used to run the re-evaluation for robustness analysis in the next blog post.

- NC_dvs_objs.csv: Contains a header row. This file contains the labeled decision variables and objectives, including the minimax regional performance objectives. It will be used for visualizing the reference set’s decision variables and performance objectives.

Visualizing the reference set

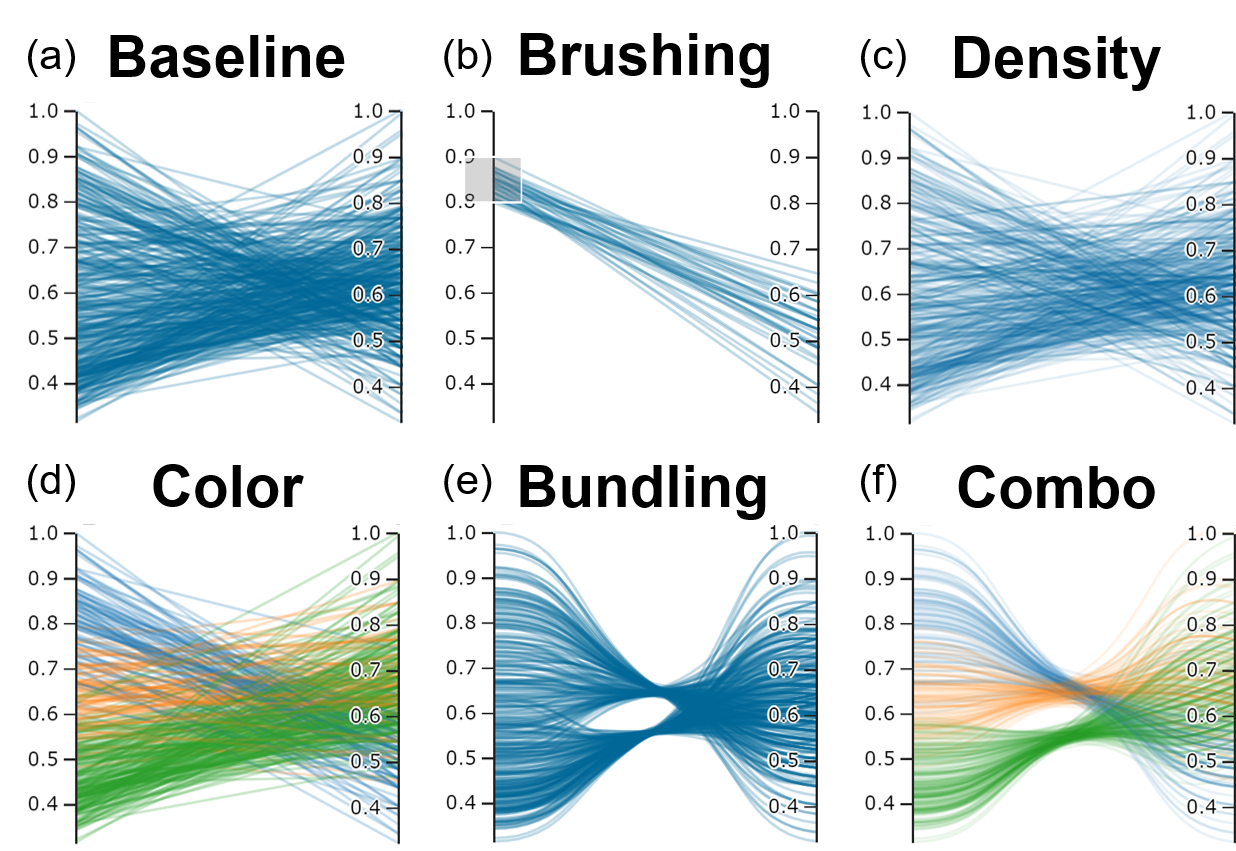

Due to the large number of decision variables, we use parallel axis plots to identify how varying the decision variables can affect specific performance objectives. Here, we use the regional reliability performance objective, REL, as an example. Figure 1 shows how all decision variables vary with regional reliability.

From Figure 1, most solutions found via the optimization conducted in the previous blog post seem to have relatively high reliability across the full range of decision variable values, so it is unclear how each decision variable might affect regional reliability. It is thus more helpful to identify specific sets of decision variables (or policies) that enable the achievement of reliability beyond a certain threshold.

With this in mind, we assume that all members of the Triangle require that their collective reliability be at least 98%. This results in the following figure:

Figure 2 has one clear takeaway: Pareto-optimality does not indicate satisfactory performance. In addition, setting this threshold makes the effects of each decision variable clearer. Regional reliability is strongly affected by the infrastructure triggers (INF) of both Durham and Raleigh. Desirable levels of regional reliability can be achieved when Durham sets a high INF value. Conversely, Raleigh can set lower INF values and still benefit from satisfactory reliability. Figure 2 also shows that having Durham set a high insurance trigger (IT) may benefit the region in terms of reliability.

However, there are caveats. Higher INF and IT values for Durham imply that the financial burden of investment and insurance payments is being borne by Raleigh and Cary, as Durham is able to withstand more risk without having to trigger an infrastructure investment or insurance payment. This may affect how each member utility perceives its individual risks and benefits of forming a cooperative contract.

The code to plot these figures can be found under ‘refset_parallel.py’ in the repository.
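The thresholding step behind the second figure can be sketched as a simple filter, followed by a comparison of decision-variable ranges before and after filtering. This is a hedged illustration rather than the contents of refset_parallel.py: the function names and column labels are hypothetical, reliability is assumed to be stored as a fraction, and the four solutions are made-up values chosen only to show the mechanics.

```python
def brush(solutions, objective, threshold):
    """Keep only solutions whose objective value meets or exceeds the threshold."""
    return [s for s in solutions if s[objective] >= threshold]


def value_range(solutions, column):
    """Return the (min, max) of one column across a list of solutions."""
    values = [s[column] for s in solutions]
    return min(values), max(values)


# Made-up solutions for illustration; not taken from the reference set.
solutions = [
    {"INF_D": 0.9, "INF_R": 0.2, "REL": 0.991},
    {"INF_D": 0.3, "INF_R": 0.7, "REL": 0.962},
    {"INF_D": 0.8, "INF_R": 0.1, "REL": 0.985},
    {"INF_D": 0.5, "INF_R": 0.6, "REL": 0.975},
]

kept = brush(solutions, "REL", 0.98)
print(len(kept))                        # 2
print(value_range(solutions, "INF_D"))  # (0.3, 0.9)
print(value_range(kept, "INF_D"))       # (0.8, 0.9)
```

A decision variable whose range narrows sharply after brushing, as the hypothetical `INF_D` does here, is one that the threshold is sensitive to; a variable whose range is unchanged is not distinguishing satisfactory from unsatisfactory policies.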

Robustness analysis and what’s next

So how is setting a threshold value of regional reliability significant?

Within the MORDM framework, robustness is defined using a multivariate satisficing metric (Gold et al, 2019). Depending on the requirements of the stakeholders, a set of criteria is defined that will then be used to distinguish between success (all criteria are met) and failure (at least one criterion is not met). Using these criteria, the rest of the Pareto-optimal policies are simulated across a number of uncertain SOWs. Each policy’s robustness is then represented by the percent of SOWs in which it meets the minimum performance criteria that have been set.

In this post, we processed the reference set and visualized its decision variable space with respect to each variable’s effect on the reliability performance objective. A similar process can be repeated across all utilities for all performance objectives.

Using the processed reference set, we will conduct multi-criterion robustness analysis using two criteria:

- Regional reliability should be at least 98%

- Regional restriction frequency should be less than or equal to 20%
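For a single policy, the satisficing metric with these two criteria can be sketched as follows. The function names, the dictionary layout, and the four example SOW outcomes are assumptions made for illustration, and both objectives are assumed to be stored as fractions rather than percentages.

```python
def meets_criteria(outcome, min_rel=0.98, max_rf=0.20):
    """Success requires every criterion to be met in a given SOW."""
    return outcome["REL"] >= min_rel and outcome["RF"] <= max_rf


def robustness(outcomes_across_sows):
    """Percent of SOWs in which the policy meets all criteria."""
    if not outcomes_across_sows:
        raise ValueError("need at least one SOW")
    successes = sum(meets_criteria(o) for o in outcomes_across_sows)
    return 100.0 * successes / len(outcomes_across_sows)


# Made-up regional outcomes of one policy in four SOWs.
sows = [
    {"REL": 0.99, "RF": 0.10},  # success
    {"REL": 0.97, "RF": 0.10},  # fails the reliability criterion
    {"REL": 0.99, "RF": 0.25},  # fails the restriction frequency criterion
    {"REL": 0.98, "RF": 0.20},  # success: boundary values satisfy both criteria
]
print(robustness(sows))  # 50.0
```

Because a SOW counts as a failure when any single criterion is missed, adding criteria can only keep robustness the same or lower it.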

We will also conduct sensitivity analysis to identify the decision variables that most impact regional robustness. Finally, we will conduct scenario discovery to identify SOWs that may cause the policies to fail.

References

Gold, D. F., Reed, P. M., Trindade, B. C., & Characklis, G. W. (2019). Identifying actionable compromises: Navigating multi‐city robustness conflicts to discover cooperative safe operating spaces for regional water supply portfolios. Water Resources Research, 55(11), 9024–9050. https://doi.org/10.1029/2019wr025462

Trindade, B. C., Reed, P. M., & Characklis, G. W. (2019). Deeply uncertain pathways: Integrated multi-city regional water supply infrastructure investment and portfolio management. Advances in Water Resources, 134, 103442. https://doi.org/10.1016/j.advwatres.2019.103442